The journey to Enterprise AI success begins with data—but not just any data architecture. Traditional data warehouses are rigid, and data lakes lack structure. Both struggle to support scalable, production-grade AI model development.

This is where Data Lakehouse architecture emerges as a game changer. It blends the scalability of data lakes with the reliability of data warehouses, enabling seamless data engineering, model training, and real-time inference from one unified platform.

As more enterprises shift to data-centric strategies, adopting a lakehouse foundation becomes critical. According to Databricks, 74% of data scientists still spend most of their time on data prep and management. With a Data Lakehouse, those bottlenecks dissolve—freeing teams to build smarter AI faster.

In this blog, we’ll explore the architecture, technical value, and use cases of lakehouses in powering enterprise-scale AI, with insights into tools like SAP BTP and strategic links to implementation-ready AI platforms.

What Is a Data Lakehouse?

A Data Lakehouse is a unified data architecture that merges the capabilities of both data lakes and data warehouses. It retains the flexibility of schema-on-read from lakes while introducing governance, performance, and structure typically found in warehouses.

Core Features Include:

● Support for open table formats (e.g., Delta Lake, Apache Iceberg).

● Real-time data processing via streaming engines like Apache Spark or Flink.

● Built-in metadata and governance layers for auditing and lineage.

● ML-friendly storage for structured, semi-structured, and unstructured data.

By offering a single system for data ingestion, transformation, storage, and AI development, lakehouses reduce architectural complexity and improve data availability.
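The pairing of lake-style schema-on-read with warehouse-style governance described above can be pictured with a toy sketch. It assumes hypothetical in-memory structures (`read_with_schema` and `CuratedTable` are illustrative names, not the API of Delta Lake, Apache Iceberg, or any real table format): raw records are stored as-is and cast to a schema only when read, while the curated table validates every append against its declared schema before writing anything.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Hypothetical toy model for illustration only; this is not the API of
# Delta Lake, Apache Iceberg, or any other open table format.
Schema = Dict[str, type]
Record = Dict[str, Any]


def read_with_schema(raw: List[Record], schema: Schema) -> List[Record]:
    """Lake-style schema-on-read: raw records are stored as-is and are
    projected and cast to the schema only when they are queried."""
    return [
        {col: (cast(r[col]) if col in r else None) for col, cast in schema.items()}
        for r in raw
    ]


@dataclass
class CuratedTable:
    """Warehouse-style table: every append is validated against a declared schema."""
    schema: Schema
    rows: List[Record] = field(default_factory=list)

    def append(self, batch: List[Record]) -> None:
        # Validate the whole batch before writing, so one bad record
        # rejects the entire append instead of leaving a partial write.
        for record in batch:
            if set(record) != set(self.schema):
                raise ValueError(f"columns {sorted(record)} do not match {sorted(self.schema)}")
            for col, expected in self.schema.items():
                if not isinstance(record[col], expected):
                    raise TypeError(
                        f"{col!r}: expected {expected.__name__}, got {type(record[col]).__name__}"
                    )
        self.rows.extend(batch)


# Usage: the raw zone tolerates messy input; the curated table does not.
raw_zone = [{"id": "1", "amount": "9.5", "note": "free text"}, {"id": "2"}]
schema: Schema = {"id": int, "amount": float}
print(read_with_schema(raw_zone, schema))  # [{'id': 1, 'amount': 9.5}, {'id': 2, 'amount': None}]

table = CuratedTable(schema)
table.append([{"id": 1, "amount": 9.5}])
try:
    table.append([{"id": 2, "amount": 1.0}, {"id": "3", "amount": 2.0}])
except TypeError as err:
    print("rejected:", err)
print(len(table.rows))  # 1
```

Validating the full batch before extending `rows` is a simplified stand-in for the all-or-nothing writes that real lakehouse table formats provide.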

Data Lake vs Data Warehouse vs Data Lakehouse

Let’s break it down:

| Feature | Data Lake | Data Warehouse | Data Lakehouse |
| --- | --- | --- | --- |
| Schema | Schema-on-read | Schema-on-write | Flexible, hybrid |
| Governance | Minimal | Strong | Strong, scalable |
| Performance | Variable | High for queries | Tuned for ML + BI |
| ML-readiness | Poor | Moderate | Excellent |
| Cost structure | Low upfront | Expensive infrastructure | Optimized for scale |
| Use cases | Storage, archives | Reporting, analytics | AI, ML, real-time analytics |

Stat to note: Enterprises adopting a lakehouse saw a 30% reduction in total cost of ownership compared with managing data lakes and warehouses separately.

Data Lakehouse for AI Model Development

A key challenge in AI model development is accessing clean, governed data quickly. Lakehouses streamline this by offering:

● Unified storage for both training and inference datasets.

● Support for ACID transactions, which keep training snapshots consistent and support model reproducibility.

● Real-time ingestion (via Kafka or Flink) for streaming-based models.

● Simplified feature store integration, accelerating model training.

● Direct consumption by notebooks and ML pipelines.
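To see how transactional commits support reproducibility, here is a toy, in-memory sketch of an append-only versioned table: each write either lands completely as a new version or fails without changing anything, and a training job can pin the exact version it read. The `VersionedTable` class and its methods are hypothetical; real formats such as Delta Lake and Apache Iceberg achieve this with a transaction log or snapshot metadata on object storage, plus concurrency handling this sketch only approximates.

```python
import threading
from typing import Any, Dict, List, Optional, Tuple

Record = Dict[str, Any]


class VersionedTable:
    """Toy append-only table in which every commit creates a new immutable snapshot.

    Hypothetical illustration only; real table formats persist a transaction
    log or snapshot metadata on storage instead of keeping it in memory.
    """

    def __init__(self) -> None:
        self._snapshots: List[Tuple[Record, ...]] = [()]  # version 0 is the empty table
        self._lock = threading.Lock()  # serializes commits so versions are strictly ordered

    @property
    def latest_version(self) -> int:
        return len(self._snapshots) - 1

    def commit(self, rows: List[Record], expected_version: int) -> int:
        """Append rows as one atomic commit, or fail without changing anything.

        Optimistic concurrency: the writer states which version it built on, and
        the commit is rejected if another writer committed in the meantime.
        """
        new_rows = tuple(dict(r) for r in rows)  # copy so later caller mutations cannot leak in
        with self._lock:
            if expected_version != self.latest_version:
                raise RuntimeError(
                    f"conflict: table is at v{self.latest_version}, writer expected v{expected_version}"
                )
            self._snapshots.append(self._snapshots[-1] + new_rows)
            return self.latest_version

    def read(self, version: Optional[int] = None) -> List[Record]:
        """Read the latest snapshot, or 'time travel' to a pinned earlier version."""
        v = self.latest_version if version is None else version
        return [dict(r) for r in self._snapshots[v]]


# Usage: pin the version a model was trained on, then keep writing.
table = VersionedTable()
training_version = table.commit([{"txn_id": 1, "label": 0}], expected_version=0)
table.commit([{"txn_id": 2, "label": 1}], expected_version=training_version)
print(len(table.read()))                  # 2
print(len(table.read(training_version)))  # 1: the training snapshot is unchanged
```

Recording `training_version` alongside a model is what makes retraining on identical data possible later, even after new rows arrive.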

For example, in fraud detection models, streaming data from transaction logs processed in a lakehouse reduces inference latency by up to 90%, enabling real-time blocking and alerts.
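Real-time fraud scoring usually relies on features computed over a recent window of events. A minimal sketch, assuming a hypothetical stream of `(card_id, timestamp_seconds, amount)` events that arrive in time order per card, keeps a per-card sliding window and returns the transaction count and total spend over the last N seconds. `SlidingWindowFeatures` is an illustrative name; in practice this logic would run inside a streaming engine such as Spark Structured Streaming or Flink.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple


class SlidingWindowFeatures:
    """Per-card transaction count and total spend over the last `window_s` seconds."""

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s
        self._events: Dict[str, Deque[Tuple[float, float]]] = defaultdict(deque)
        self._totals: Dict[str, float] = defaultdict(float)

    def update(self, card_id: str, ts: float, amount: float) -> Tuple[int, float]:
        """Add one event and return (count, total) for the window (ts - window_s, ts]."""
        window = self._events[card_id]
        window.append((ts, amount))
        self._totals[card_id] += amount
        # Evict expired events; assumes timestamps arrive in non-decreasing order per card.
        while window and window[0][0] <= ts - self.window_s:
            _, old_amount = window.popleft()
            self._totals[card_id] -= old_amount
        return len(window), self._totals[card_id]


# Usage: each new transaction yields fresh features a model could score immediately.
features = SlidingWindowFeatures(window_s=60)
print(features.update("card-1", 0, 10.0))   # (1, 10.0)
print(features.update("card-1", 30, 20.0))  # (2, 30.0)
print(features.update("card-1", 70, 5.0))   # (2, 25.0): the t=0 event has expired
```

Keeping a running total avoids rescanning the window on every event, so each update costs time proportional only to the number of expired events.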

Enterprise AI Use Cases Powered by Lakehouse

Lakehouses are ideal for powering advanced AI initiatives across industries:

1. Churn Prediction

Train models on historical customer behavior combined with real-time interaction logs from web, CRM, and email systems, all available in a unified store.

2. NLP & Document Intelligence

Store semi-structured and unstructured documents (PDFs, emails), apply AI models using Spark NLP, and serve insights instantly.

3. Predictive Maintenance

Ingest IoT sensor data continuously and combine it with historical failure logs to forecast machine downtime.
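Combining sensor streams with failure logs typically means turning the failure log into training labels. The sketch below assumes hypothetical record shapes (readings as `(machine_id, timestamp_hours, sensor_value)`, failures as `(machine_id, timestamp_hours)`) and a hypothetical `label_readings` helper: a reading is labeled 1 when the same machine fails within a chosen horizon after it. The 24-hour default is illustrative, not a recommendation.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Dict, List, Tuple

Reading = Tuple[str, float, float]  # (machine_id, timestamp_hours, sensor_value)
Failure = Tuple[str, float]         # (machine_id, timestamp_hours)


def label_readings(
    readings: List[Reading], failures: List[Failure], horizon_h: float = 24.0
) -> List[Tuple[Reading, int]]:
    """Label each reading 1 if its machine fails within (t, t + horizon_h], else 0."""
    # Index failure times per machine, sorted so they can be binary-searched.
    failure_times: Dict[str, List[float]] = defaultdict(list)
    for machine_id, t in failures:
        failure_times[machine_id].append(t)
    for times in failure_times.values():
        times.sort()

    labeled = []
    for reading in readings:
        machine_id, t, _ = reading
        times = failure_times.get(machine_id, [])
        i = bisect_right(times, t)  # index of the first failure strictly after the reading
        label = int(i < len(times) and times[i] <= t + horizon_h)
        labeled.append((reading, label))
    return labeled


# Usage: one machine fails at hour 30; only the reading 20 hours earlier is positive.
readings = [("m1", 0.0, 0.5), ("m1", 10.0, 0.9), ("m1", 40.0, 0.4), ("m2", 5.0, 0.3)]
failures = [("m1", 30.0)]
print([label for _, label in label_readings(readings, failures)])  # [0, 1, 0, 0]
```

The labeled readings can then feed a forecasting model; in a lakehouse, both inputs would typically be read from the same governed tables rather than from separate systems.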

Each of these use cases becomes easier to manage, scale, and iterate when backed by the lakehouse approach.

SAP BTP + Data Lakehouse Integration

SAP’s Business Technology Platform (BTP) is a robust enabler of Enterprise AI with capabilities that align perfectly with lakehouse principles.

What SAP BTP Offers:

● SAP Data Intelligence to orchestrate and govern lakehouse data pipelines.

● AI Core and AI Foundation for scalable ML model development.

● Integration with data lake formats like Delta, Parquet, and Apache Hudi.

● Low-code tools to connect SAP and non-SAP sources with lakehouse storage.

Using SAP BTP, teams can operationalize machine learning faster and more securely—thanks to built-in data lineage, security, and scalability.

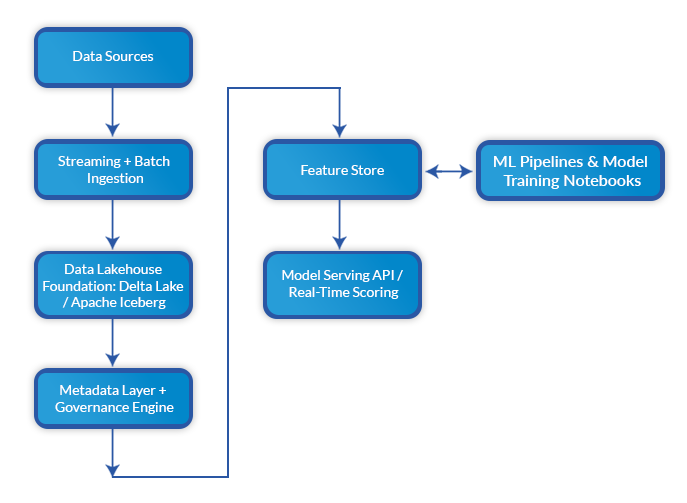

Technical Architecture Snapshot

Here’s a high-level view of an Enterprise AI + Data Lakehouse architecture:

This end-to-end flow empowers data scientists, engineers, and analysts to collaborate efficiently without duplication or data silos.

Enabling AI-Driven Teams Internally

Lakehouses don’t just help AI teams—they empower the whole data ecosystem:

● Data Scientists: Build features and train models faster with less data wrangling.

● Engineers: Deploy and monitor AI services using structured APIs.

● Governance Teams: Use metadata for auditing, explainability, and compliance.

Benchmarks and Results

Organizations that adopted a lakehouse architecture reported:

● 2x acceleration in model training cycles.

● 40% decrease in pipeline breakage.

● Improved SLA compliance, with >99.5% model availability in production.

● 30% overall cost reduction in infrastructure and ETL.

In contrast, traditional data lake + warehouse setups suffered from poor real-time readiness, excessive data duplication, and longer iteration cycles.

Conclusion: Why Lakehouse Is Foundational to Enterprise AI

As data complexity grows, enterprise teams need more than just scalable storage—they need intelligent, integrated platforms that power AI model development and streamline end-to-end workflows.

The Data Lakehouse architecture does just that. It supports hybrid data types, real-time analytics, and advanced machine learning pipelines, all while maintaining governance and reducing cost.

Platforms like SAP BTP take this one step further by embedding automation, security, and orchestration—making it easier to deploy AI at scale across an enterprise.

Whether you’re building customer segmentation models or scaling document intelligence pipelines, your success hinges on how fast and reliably you can move data to insight. And for that, the lakehouse isn’t optional—it’s essential.

Ready to modernize your AI stack?

Download our Data Lakehouse Assessment Guide or speak to an expert about integrating SAP BTP with your AI roadmap.

About the Author

Deepak Agarwal, a digital and AI transformation expert with over 16 years of experience, is dedicated to helping clients across various industries realize their business goals through digital innovation. He has a deep understanding of the unique challenges and opportunities these industries face, and he is passionate about using cutting-edge technologies to solve real-world business problems. He has a proven track record of helping clients improve operations, increase efficiency, and reduce costs through emerging technologies.