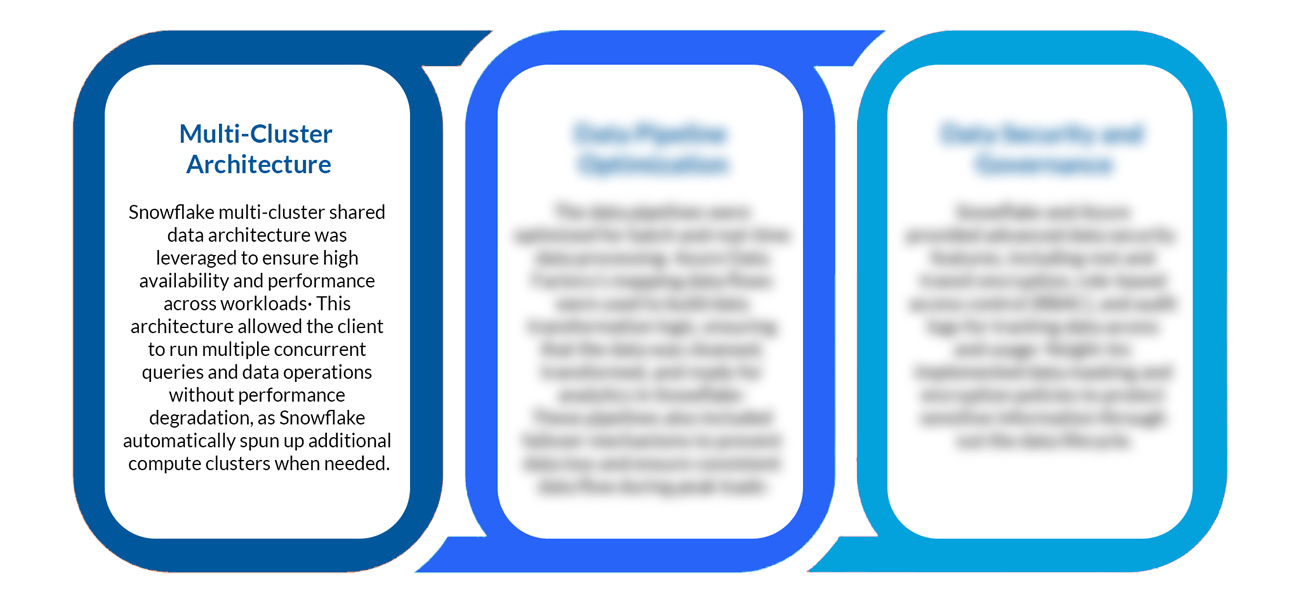

Tech Focus: Architecture, Data Flow, and Pipeline Configuration

It was a desperate situation at Global Machinery Corporation, where the data environment was a mishmash of HANA, Oracle, SQL Server, and Cloudera systems. Working across these disparate sources made the company's business intelligence (BI) operations inefficient and expensive.

Snowflake Tenant Setup

Nsight-Inc configured the client’s dedicated Snowflake environment (tenant) as the primary

Azure Blob Storage Configuration

All stored data was encrypted to meet security and governance compliance requirements.
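To illustrate what storage-level encryption settings can look like, the Python sketch below builds an Azure Resource Manager (ARM) resource definition for a StorageV2 account. The account name, region, SKU, and API version are placeholder assumptions, not the client's actual configuration. Azure Storage encrypts data at rest by default; the `encryption` block only makes that explicit, while `supportsHttpsTrafficOnly` and `minimumTlsVersion` cover data in transit.

```python
import json


def storage_account_resource(name: str, location: str) -> dict:
    """Build an ARM resource definition for a Blob Storage account.

    Hypothetical sketch: name, location, SKU, and apiVersion are placeholders.
    """
    return {
        "type": "Microsoft.Storage/storageAccounts",
        "apiVersion": "2022-09-01",
        "name": name,
        "location": location,
        "kind": "StorageV2",
        "sku": {"name": "Standard_LRS"},
        "properties": {
            # Reject plain-HTTP requests so data is encrypted in transit.
            "supportsHttpsTrafficOnly": True,
            "minimumTlsVersion": "TLS1_2",
            # Block anonymous public read access to containers.
            "allowBlobPublicAccess": False,
            # Server-side encryption at rest with Microsoft-managed keys.
            "encryption": {
                "keySource": "Microsoft.Storage",
                "services": {
                    "blob": {"enabled": True},
                    "file": {"enabled": True},
                },
            },
        },
    }


if __name__ == "__main__":
    # "gmcdatalake" is a hypothetical account name used only for illustration.
    print(json.dumps(storage_account_resource("gmcdatalake", "eastus"), indent=2))
```

The resulting object could be placed in the `resources` array of an ARM template and deployed alongside the rest of the environment.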

Virtual Machine Usage

The VMs were also used to run custom scripts and integration tasks using Azure Data Factory.

Tech Focus: Architecture, Data Flow, and Pipeline Configuration

Nsight designed a streamlined data flow for moving data from the client's multiple legacy systems into Snowflake. Azure Data Factory scheduled the Extract, Transform, Load (ETL) processes in this flow: data from HANA, Oracle, Cloudera, and SQL Server was extracted, transformed, and then loaded into Snowflake, centralizing it in a single platform.
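The extract, transform, and load stages described above can be sketched in plain Python. This is a minimal, self-contained illustration under stated assumptions: the sample records, column names, and `inventory` table are hypothetical, the extract step returns in-memory rows instead of calling real connectors, and SQLite stands in for Snowflake so the example runs without any cloud account.

```python
import sqlite3
from typing import Dict, List, Tuple

# (source_system, part_number, quantity): a hypothetical shared schema.
Row = Tuple[str, str, int]

# Hypothetical per-source mappings: which key holds the part number and quantity.
COLUMN_MAP = {
    "HANA": ("MATNR", "MENGE"),
    "Oracle": ("PART_NO", "QTY"),
    "SQL Server": ("PartNumber", "Quantity"),
    "Cloudera": ("part", "qty"),
}


def extract() -> Dict[str, List[dict]]:
    """Stand-in for the extract step: sample rows per legacy system."""
    return {
        "HANA": [{"MATNR": "p-100 ", "MENGE": "5"}],
        "Oracle": [{"PART_NO": "P-200", "QTY": 3}],
        "SQL Server": [{"PartNumber": "P-300", "Quantity": "7"}],
        "Cloudera": [{"part": "p-400", "qty": 2}],
    }


def transform(raw: Dict[str, List[dict]]) -> List[Row]:
    """Map every source onto one schema and normalize the values."""
    rows: List[Row] = []
    for system, records in raw.items():
        part_key, qty_key = COLUMN_MAP[system]
        for rec in records:
            part = str(rec[part_key]).strip().upper()
            rows.append((system, part, int(rec[qty_key])))
    return rows


def load(conn: sqlite3.Connection, rows: List[Row]) -> int:
    """Load normalized rows into one central table; return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS inventory ("
        "source_system TEXT, part_number TEXT, quantity INTEGER)"
    )
    conn.executemany("INSERT INTO inventory VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM inventory").fetchone()[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(load(conn, transform(extract())))  # 4
```

Keeping the per-source differences in a single mapping table means adding another legacy system only requires a new `COLUMN_MAP` entry, not a new code path.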

Integrating Snowflake with Microsoft Azure Services

To get the maximum benefit from Azure, Nsight built a solution that integrated Snowflake with Azure Data Factory, Azure Blob Storage, and Azure Data Lake Storage Gen2. Azure VMs, SSD storage, and Azure Container Services were deployed to improve data flow and processing.
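One common way to let Snowflake read files from Azure storage is a storage integration plus an external stage, followed by `COPY INTO`. The Python sketch below only generates that Snowflake SQL as strings; it is an assumption about how such an integration could be set up, not a record of the client's configuration, and every identifier (integration, stage, tenant ID, account, container, path, table) is a hypothetical placeholder.

```python
from typing import List


def azure_stage_ddl(
    integration: str,
    stage: str,
    tenant_id: str,
    account: str,
    container: str,
    path: str,
    table: str,
) -> List[str]:
    """Return Snowflake statements that expose an Azure container as a stage.

    All arguments are hypothetical placeholders supplied by the caller.
    """
    location = f"azure://{account}.blob.core.windows.net/{container}/{path}/"
    return [
        # Storage integration: delegates authentication to an Azure AD tenant.
        f"CREATE STORAGE INTEGRATION {integration}\n"
        "  TYPE = EXTERNAL_STAGE\n"
        "  STORAGE_PROVIDER = 'AZURE'\n"
        "  ENABLED = TRUE\n"
        f"  AZURE_TENANT_ID = '{tenant_id}'\n"
        f"  STORAGE_ALLOWED_LOCATIONS = ('{location}')",
        # External stage pointing at the allowed location, reading Parquet files.
        f"CREATE STAGE {stage}\n"
        f"  STORAGE_INTEGRATION = {integration}\n"
        f"  URL = '{location}'\n"
        "  FILE_FORMAT = (TYPE = PARQUET)",
        # Bulk-load staged files, matching Parquet columns to table columns by name.
        f"COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        "  MATCH_BY_COLUMN_NAME = CASE_INSENSITIVE",
    ]


if __name__ == "__main__":
    for stmt in azure_stage_ddl(
        "azure_int", "raw_stage", "<tenant-id>", "gmcdatalake",
        "landing", "erp", "raw.orders",
    ):
        print(stmt + ";\n")
```

In Snowflake's documented flow, an Azure administrator then grants consent using the URL reported by `DESC STORAGE INTEGRATION` and gives the resulting service principal access to the container before the stage can be read.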

Orchestrating the data pipelines was Azure Data Factory's most important task, enabling the client to coordinate data transfers from on-premises systems to the cloud. Power BI was used for top-level data visualization and real-time business intelligence across departments.
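As a rough illustration of this kind of orchestration, the Python sketch below builds two Azure Data Factory JSON definitions: a pipeline with a Copy activity that moves data from an on-premises SQL Server dataset to a Snowflake dataset, staging it in Blob Storage on the way, and a schedule trigger that runs the pipeline daily. All pipeline, dataset, linked-service, and trigger names are hypothetical, and the source and sink type names vary by connector version (newer Snowflake connector versions use different type names). On-premises sources are typically reached through a self-hosted integration runtime configured on the linked service, which this sketch does not show.

```python
import json
from typing import Dict


def copy_pipeline(name: str, source_ds: str, sink_ds: str, staging_ls: str) -> Dict:
    """Pipeline with one Copy activity: SQL Server -> Blob staging -> Snowflake."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyToSnowflake",
                    "type": "Copy",
                    "inputs": [{"referenceName": source_ds, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_ds, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "SqlServerSource"},
                        "sink": {"type": "SnowflakeSink"},
                        # Staged copy: land data in Blob Storage first, then load it.
                        "enableStaging": True,
                        "stagingSettings": {
                            "linkedServiceName": {
                                "referenceName": staging_ls,
                                "type": "LinkedServiceReference",
                            },
                            "path": "staging",
                        },
                    },
                }
            ]
        },
    }


def daily_trigger(name: str, pipeline: str, start_time: str) -> Dict:
    """Schedule trigger that starts the named pipeline once a day (UTC)."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    pipeline = copy_pipeline("IngestSqlServer", "SqlServerOrders", "SnowflakeOrders", "BlobStaging")
    trigger = daily_trigger("NightlyIngest", "IngestSqlServer", "2024-01-01T02:00:00Z")
    print(json.dumps(pipeline, indent=2))
    print(json.dumps(trigger, indent=2))
```

Separating the pipeline from its trigger keeps scheduling independent of the copy logic, so the same pipeline can also be run on demand or from another pipeline.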

Technical setup and integration